Test Automation Tech Test

I’ve been interviewing candidates for tech roles for years at this point, from college graduates up to senior management, in Ireland and abroad, virtually and face to face. I generally really enjoy meeting people and have refined my interview techniques to make candidates as comfortable as possible, but the one thing I’ve spent a lot of time tweaking is how I gauge technical ability. I’ve delivered paper-based tests, multiple-choice code questions and whiteboard activities, and the common factor in all of them is just how abstract the tasks are and how unrelated they are to typical day-to-day activities.

The recent trend towards longer exercises embedded within online platforms has introduced a much more authentic experience, letting interviewers understand how the candidate would operate in the day-to-day activities of the role they are being interviewed for. Having been on both sides of these exercises, as interviewer and interviewee, I definitely prefer this over the theoretically challenging exercises that seem to be straight out of college textbooks.

However, the vast majority of these online platforms are specifically tailored towards classic software development. Recently I was tasked with setting up technical interviews for Quality Assurance (QA) Automation engineers. While assessing their ability to write code is certainly useful, dedicated exercises that target the QA mindset are ideal in this case. I’ve found a handful of these exercises online, but I haven’t convinced my recruitment team to spring for the budget. So, like any reasonable person would do in that scenario, I built my own.

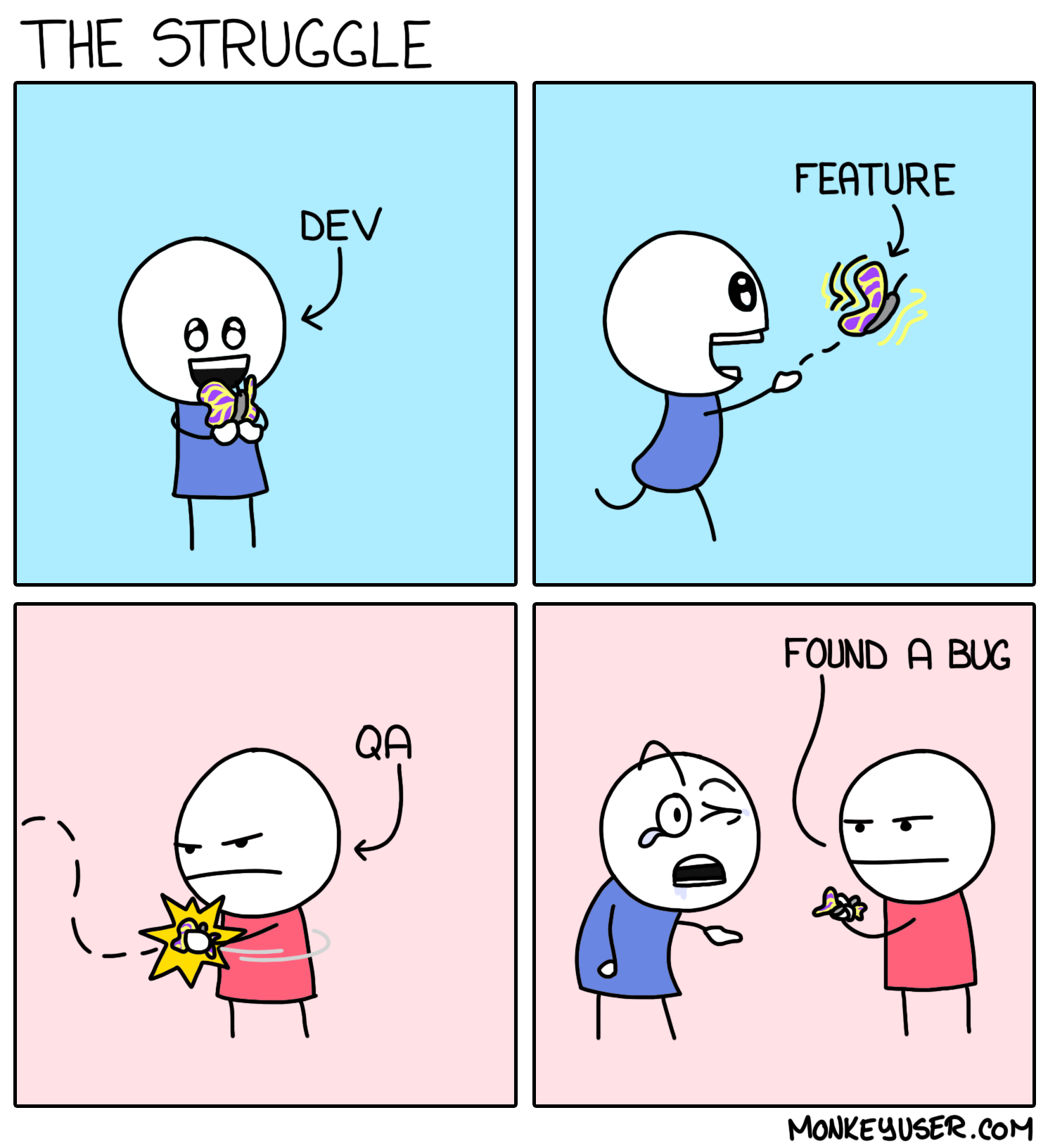

To understand why I wanted an alternative to the traditional test, look at the cartoon above, which illustrates just how different the two mindsets are. Developers are focused on building new things and QAs are ready to find a way to break them. So for this technical assessment, rather than ask the QA engineer to build a business requirement, I built it myself and I want my candidates to show me how they would automate the testing of this software.

The Assessment

The application the candidates are asked to build a test framework for is a simple data pipeline. A CSV file is dropped in one AWS S3 bucket, which triggers a Lambda function to process the file and the records in it. As long as there are no errors, the function then drops the file in JSON format into another S3 bucket. There is a series of error codes that can be triggered by file-specific issues (e.g. empty files, binary files) and another series of error codes to help catch errors in the data schema of the records in the input file.
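To make that flow concrete, here is a minimal Python sketch of how a test might drive the pipeline end to end: put a CSV in the input bucket, then poll the output bucket until the JSON turns up or a timeout expires. The `s3` argument is expected to behave like a boto3 S3 client (only `put_object`, `get_object` and `exceptions.NoSuchKey` are used). The function name, the bucket names and the idea that the output key is the input key with a `.json` extension are my own assumptions for illustration, so check them against the real application.

```python
import time


def run_pipeline(s3, input_bucket, output_bucket, key, csv_body,
                 timeout_s=30.0, poll_interval_s=1.0):
    """Upload a CSV and wait for the matching JSON object to appear.

    Returns the JSON text, or None if nothing arrived before the timeout.
    """
    s3.put_object(Bucket=input_bucket, Key=key, Body=csv_body.encode("utf-8"))

    # Assumption: the Lambda keeps the file name and swaps the extension.
    output_key = key.rsplit(".", 1)[0] + ".json"

    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            response = s3.get_object(Bucket=output_bucket, Key=output_key)
        except s3.exceptions.NoSuchKey:
            # The Lambda runs asynchronously, so wait and try again.
            time.sleep(poll_interval_s)
            continue
        return response["Body"].read().decode("utf-8")
    return None
```

Passing the client in, rather than creating it inside the function, means the same helper can be pointed at a real account with `boto3.client("s3")` or at a stub when trying out the framework itself.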

This is not an exceptionally complex application, but it is more than enough for a QA engineer to build a test automation framework around. Things that I’d consider good for the candidate to tackle as part of their approach to this exercise:

- Environment Validation - A quick check that everything is in place before you try to do any validation, for example checking that the buckets exist.

- Input File Creation - Not only do we need a valid input file for happy path testing but, in order to ensure the error messages are being triggered correctly, a custom set of input records needs to be curated and that is the responsibility of the QA team.

- Output File Validation - The output is in JSON format, so there needs to be a translation function to check that the inputs have mapped correctly to the outputs.

- Error Scenarios - There are a lot of potential error scenarios that have been coded for specifically, but a really impressive accomplishment would be to break the Lambda function with a single record.

- Non-Functional Tests - This generic heading covers things like load testing, soak testing and performance testing. These are generally seen as nice to have, so it is always good to see them considered.

- Reporting of Results - It’s one thing to run the tests in an automated fashion, but reporting back the status is also important, as the time is only saved if the review of the test results is also made efficient.
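As a rough illustration of two of the items above, input file creation and output file validation, here is a small Python sketch using only the standard library. The three-column schema (`id`, `name`, `amount`) and the function names are hypothetical and purely for illustration. The comparison also assumes the output is a JSON array with one object per input row, in the same order, which may not match how the real pipeline shapes its output.

```python
import csv
import io
import json

# Hypothetical schema, for illustration only.
FIELDS = ["id", "name", "amount"]


def build_csv(rows):
    """Render a list of dicts as CSV text with a header row.

    Missing fields are written as empty strings, which is handy for
    building deliberately invalid records.
    """
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def find_mismatches(csv_text, json_text):
    """Compare CSV input with JSON output and list readable differences."""
    expected = list(csv.DictReader(io.StringIO(csv_text)))
    actual = json.loads(json_text)

    problems = []
    if len(expected) != len(actual):
        problems.append(
            f"row count: expected {len(expected)}, got {len(actual)}"
        )
    for index, (want, got) in enumerate(zip(expected, actual)):
        for field, value in want.items():
            # Values are compared as strings, so 10 and "10" match,
            # but 10.5 and "10.50" would not.
            if str(got.get(field)) != value:
                problems.append(
                    f"row {index}, field {field!r}: "
                    f"expected {value!r}, got {got.get(field)!r}"
                )
    return problems
```

Returning a list of human-readable problems, rather than failing on the first difference, also feeds naturally into the reporting item: the same list can be printed, logged or attached to a test report.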

When this is delivered to candidates to review, one thing that I really miss from the popular commercial platforms is the ability to limit the time available to the candidate. In most cases when these exercises are offered, the candidate has a number of days to tackle it, but the platform starts a timer when you log in and you may have only 2 or 3 hours to get your effort submitted. With what I’ve built, I have a document with the details of the assessment and the deadline for when it’s due. The concern here is that candidates will spend a huge amount of their personal time on this exercise, which is not only bad for their work-life balance but also skews the amount of work delivered.

If you want to see more details on this application, including steps on how to set it up for your own candidate interviews, feel free to visit my GitHub, where the details are stored: https://github.com/RedXIV2/TestAutomationTechnicalTest