Configuration Management Tool Comparison

As part of my master’s degree, I wrote my thesis comparing several of the most common configuration management tools on the market for speed of execution, cost and ease of implementation. Below is a summary of my findings; if you’re interested in learning more about the test framework, test conditions and low-level detail, please feel free to download the full thesis here.

The initial intention of this work was to compare the four main players in the configuration management market at the time. However, due to the time constraints of the thesis (and a job, life, kids, etc.), I wasn’t able to fully integrate all four tools into the test framework I built for this research. Puppet, Ansible and Salt were integrated, but Chef proved too complex to integrate into a framework designed to be transient.

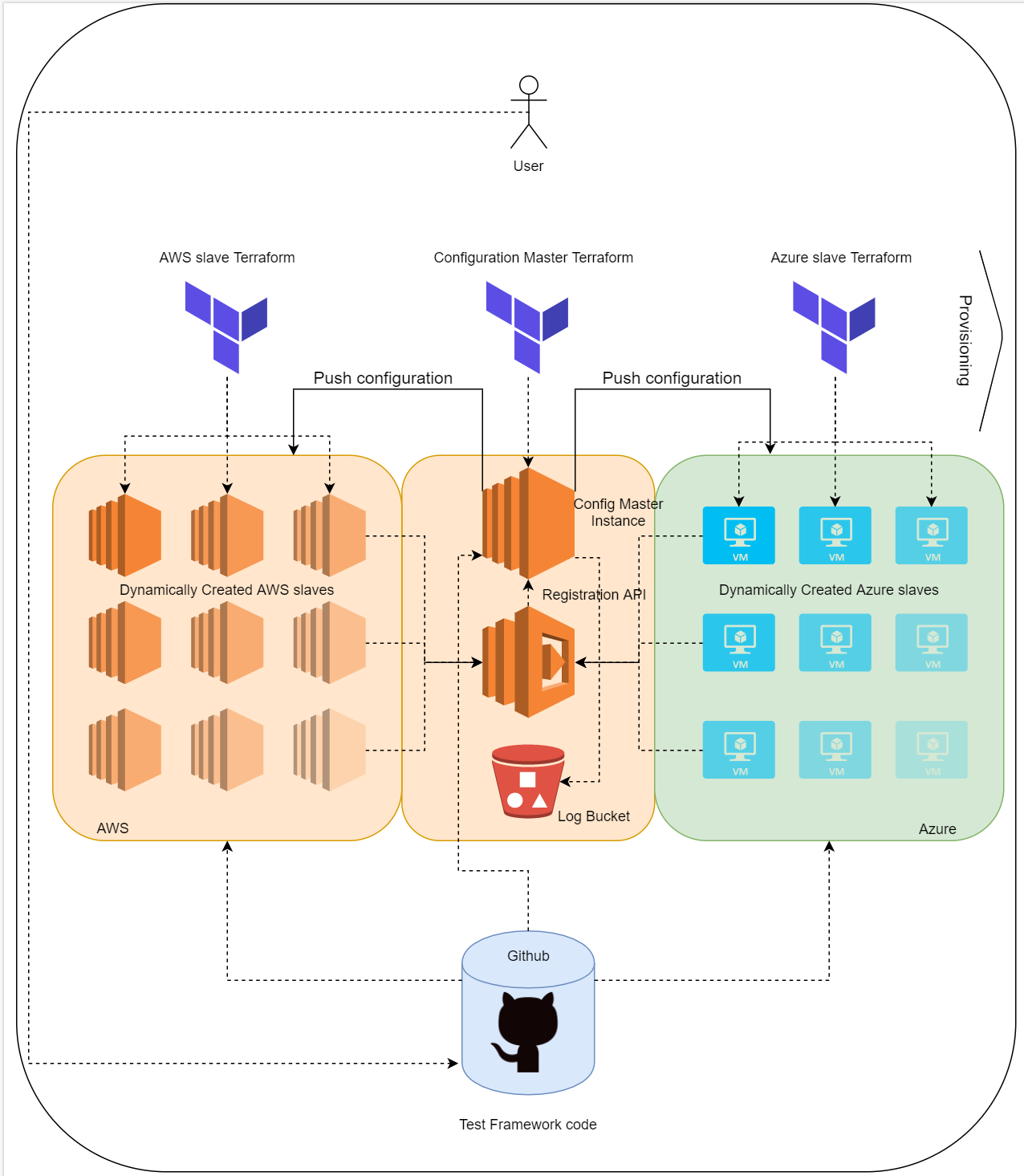

The framework itself uses Terraform files to create slaves on both AWS and Azure, as well as a dedicated “ConfigMaster” that delivers the configuration tool resources needed to execute the tests. Because it relies on Terraform’s count functionality, the number of slaves under test can be changed easily, and the custom code in the Registration API (a custom-built Lambda function) uploads the results to an S3 bucket using dynamic object prefixes.
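The post doesn’t spell out the object-prefix scheme, so the following is a minimal Python sketch under assumed conventions: the function name build_result_key and the results/<cloud>/<tool>/<N>-slaves/<test>/<timestamp>/<slave>.json layout are hypothetical, not the thesis code. In a real Lambda the returned string would be passed as the Key argument to the boto3 S3 client’s put_object call; that call is left out so the sketch runs without AWS credentials.

```python
from datetime import datetime, timezone


def build_result_key(tool: str, cloud: str, slave_count: int, test_name: str,
                     slave_id: str, started_at: datetime) -> str:
    """Build a hypothetical S3 object key whose prefix encodes the run context."""
    # Normalise to UTC so runs from different regions sort consistently by prefix.
    stamp = started_at.astimezone(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    parts = [
        "results",
        cloud.lower(),                            # e.g. "aws" or "azure"
        tool.lower(),                             # e.g. "puppet", "ansible", "salt"
        f"{slave_count}-slaves",                  # mirrors the Terraform count value
        test_name.lower().replace(" ", "-"),      # e.g. "create-users"
        stamp,
        f"{slave_id}.json",
    ]
    return "/".join(parts)


if __name__ == "__main__":
    run_start = datetime(2019, 5, 1, 14, 30, tzinfo=timezone.utc)
    print(build_result_key("Ansible", "AWS", 5, "Create users", "slave-3", run_start))
    # prints "results/aws/ansible/5-slaves/create-users/20190501T143000Z/slave-3.json"
```

Putting the slave count and cloud in the prefix means results from different Terraform count values never overwrite each other and can be listed per scenario with a single prefix query.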

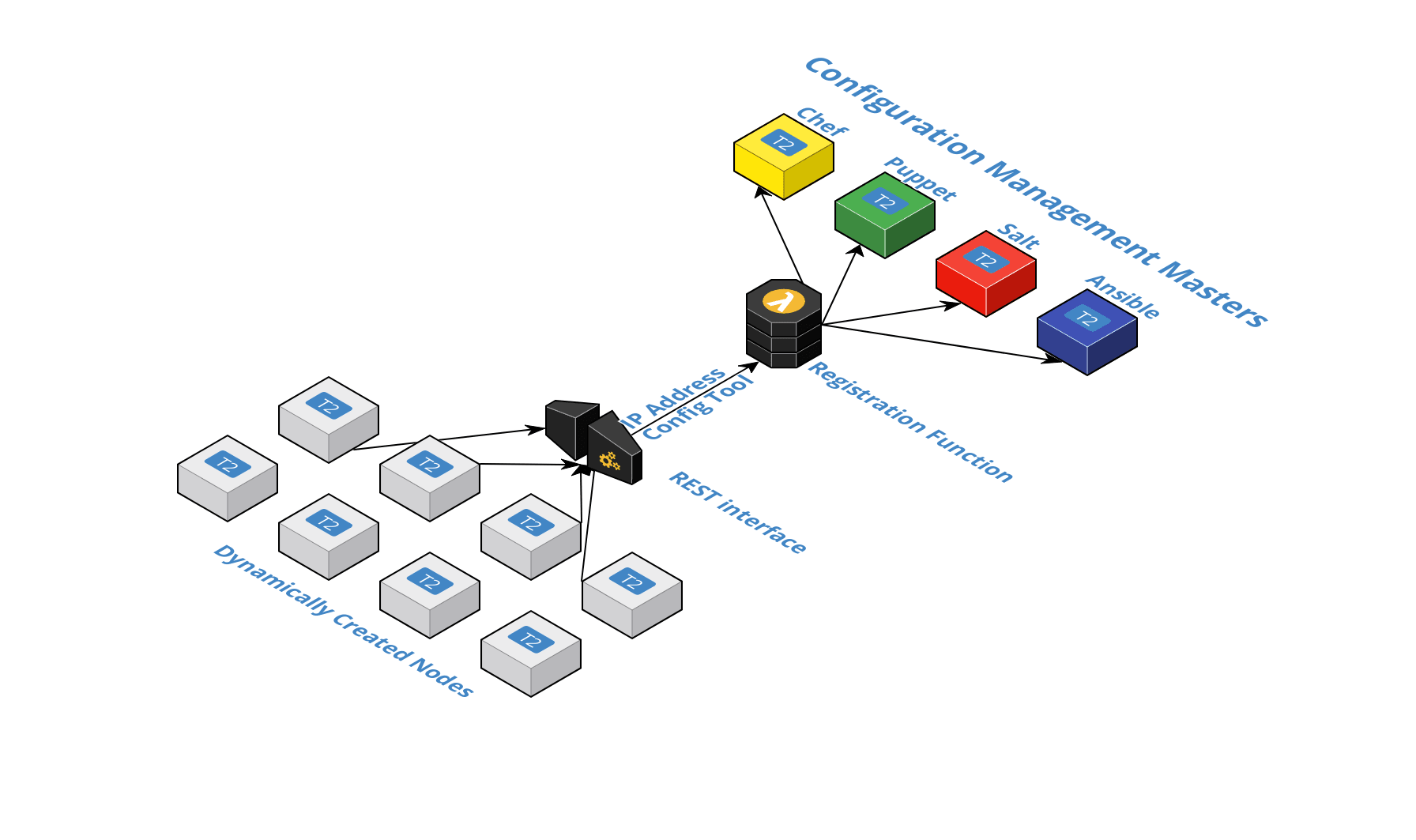

As the key metric gathered for these tests was time, it was crucial to bypass the native “polling” functionality of many of these tools and instead use the custom-built “Registration API”. This forcefully registered minions with their respective master node and then kicked off the tests immediately after any connectivity steps were complete. This is better highlighted below:
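The registration idea can be sketched in Python as a small tracker that starts the tests the moment the last expected slave checks in, rather than waiting for a polling interval. Everything here (RegistrationTracker, register, the start_tests callback) is a hypothetical illustration of the behaviour described above, not the actual Lambda code.

```python
import time
from typing import Callable, Optional, Set


class RegistrationTracker:
    """Hypothetical sketch: kick off tests as soon as every expected slave has
    registered, instead of waiting for a tool's native polling interval."""

    def __init__(self, expected: int, start_tests: Callable[[Set[str]], None]):
        self.expected = expected            # e.g. the Terraform count value
        self.start_tests = start_tests      # callback that triggers the test run
        self.registered: Set[str] = set()
        self.started_at: Optional[float] = None

    def register(self, slave_id: str) -> bool:
        """Record a slave; return True only if this call triggered the test run."""
        if self.started_at is not None:
            return False  # Tests already running; ignore late or duplicate check-ins.
        self.registered.add(slave_id)  # A set makes repeated registrations harmless.
        if len(self.registered) < self.expected:
            return False
        # All expected slaves are connected: start the clock and run the tests now.
        self.started_at = time.monotonic()
        self.start_tests(set(self.registered))
        return True


if __name__ == "__main__":
    tracker = RegistrationTracker(expected=2,
                                  start_tests=lambda ids: print("starting on", sorted(ids)))
    tracker.register("slave-1")  # still waiting for slave-2
    tracker.register("slave-2")  # prints "starting on ['slave-1', 'slave-2']"
```

Using time.monotonic for the start mark avoids skew from wall-clock adjustments on the host, which matters when time is the metric being measured.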

Results

NOTE: While a thorough effort was made to reduce any external factors affecting these findings, there is no guarantee that they are ironclad, so they should be treated as indicative rather than authoritative.

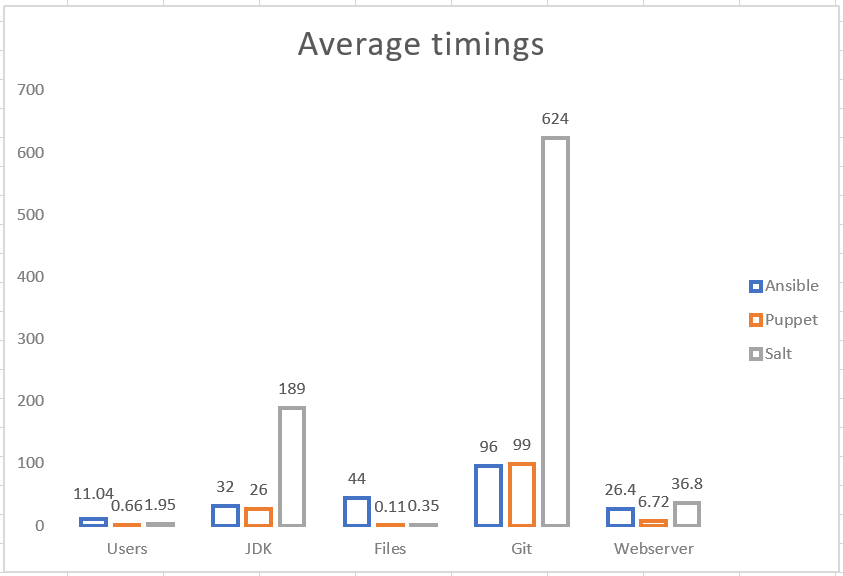

For this research, I ran the following tests through each configuration management tool:

- Creating 10 users

- Installing and setting up a JDK

- Creating 50 files

- Cloning a repository with Git

- Setting up a webserver

These tests were run against single and multiple slaves, at different times of the day, and on multiple cloud platforms. Interestingly, the only noticeable difference between AWS and Azure was the JDK installation, which for some reason took twice as long on Azure.

The image above shows the average result, in seconds, for each of these tests. Puppet was almost always the fastest, often by quite a margin, but it is arguably the least accessible of the tools I integrated with the framework (while acknowledging that I couldn’t get Chef into it at all). Ansible is a very simple, lightweight tool and was consistent in its performance, even if it wasn’t the fastest in this set of results. Salt, however, was quite inconsistent in its performance, particularly once multiple slaves were targeted at once.
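The comparison above rests on averages plus a judgement about consistency. One way to put a number on consistency is the coefficient of variation (population standard deviation divided by the mean) over repeated runs of the same tool and test. The sketch below is an assumption about how that could be computed from raw timings; the summarise function and the (tool, test, seconds) sample shape are hypothetical and not the metric used in the thesis.

```python
from collections import defaultdict
from statistics import mean, pstdev
from typing import Dict, Iterable, List, Tuple

Sample = Tuple[str, str, float]  # (tool, test, elapsed seconds) for one run


def summarise(samples: Iterable[Sample]) -> Dict[Tuple[str, str], Tuple[float, float]]:
    """Return {(tool, test): (mean_seconds, coefficient_of_variation)}."""
    grouped: Dict[Tuple[str, str], List[float]] = defaultdict(list)
    for tool, test, seconds in samples:
        grouped[(tool, test)].append(seconds)

    summary: Dict[Tuple[str, str], Tuple[float, float]] = {}
    for key, times in grouped.items():
        avg = mean(times)
        # CV = population std dev / mean; a higher value means less consistent runs.
        cv = pstdev(times) / avg if avg > 0 else 0.0
        summary[key] = (avg, cv)
    return summary
```

Because the coefficient of variation is scale-free, it lets a slow but steady tool be compared fairly with a fast but erratic one, which a raw standard deviation would not.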

Resources

As part of this research, the following resources were produced: